September DDoS incident recap

Between 28 and 30 September, our customers experienced long response times, timeouts, and failed requests from the ConfigCat CDN. This was a significant disruption of the ConfigCat service, so we want to be upfront about it and share everything about what happened, what we've done, and what changes we will implement.

What happened: timeline

28th September

8:00 AM UTC: We started receiving alerts that the CDN nodes were serving an unusually high number of requests. We immediately began adding new nodes to the pool and kept adding more over the next few hours to keep up with demand.

Normally, traffic is around 3,000 requests/sec. During the incident, the load rose to 600,000 requests/sec, 200 times the average, and later peaked at 1,500,000 requests/sec, 500 times the average.

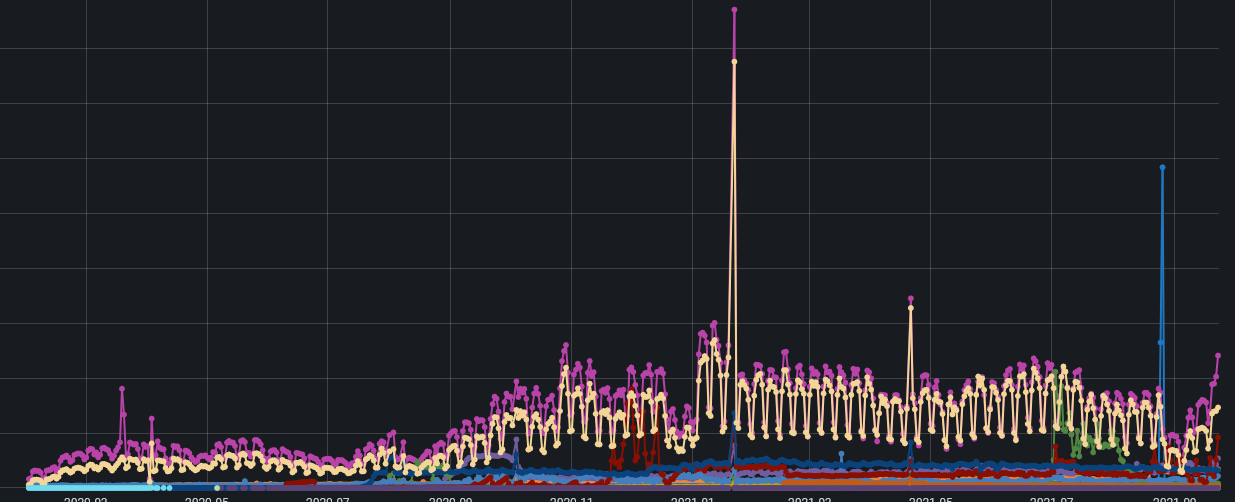

The config JSON download history before the incident.

The config JSON download history after the incident.

9:30 AM UTC: We identified the source of the requests: a misconfigured ConfigCat JavaScript SDK built into a Chrome browser extension. Every instance of the extension initialized many new ConfigCat clients in auto polling mode and never disposed of any of them. With copies of the extension running all over the world, the result was a textbook accidental DDoS attack.
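
The pattern behind the incident is easy to reproduce in miniature. The sketch below uses a hypothetical `AutoPollingClient` class, not the actual ConfigCat JavaScript SDK API, in which every instance starts its own polling timer. If you create a client per lookup and never dispose of it, the polling traffic multiplies with usage. A single shared client per SDK key, disposed on shutdown, keeps the traffic constant. The names `AutoPollingClient`, `leakyClient`, `getSharedClient`, and `disposeAll`, along with the 60-second interval, are assumptions made for illustration.

```typescript
// Hypothetical stand-in for an auto-polling feature flag client (not the real SDK API).
// Each instance starts its own timer and downloads the config every interval.
class AutoPollingClient {
  static active = 0; // instances whose timers are still running

  private timer: ReturnType<typeof setInterval> | undefined;

  constructor(sdkKey: string, intervalMs: number, fetchConfig: (key: string) => void) {
    this.timer = setInterval(() => fetchConfig(sdkKey), intervalMs);
    AutoPollingClient.active++;
  }

  // Stops polling. Safe to call more than once.
  dispose(): void {
    if (this.timer !== undefined) {
      clearInterval(this.timer);
      this.timer = undefined;
      AutoPollingClient.active--;
    }
  }
}

const POLL_INTERVAL_MS = 60_000; // assumed interval, for illustration only

// Anti-pattern: a fresh polling client per lookup that nobody disposes.
// Every call adds another timer, so request volume grows with usage.
function leakyClient(sdkKey: string, fetchConfig: (key: string) => void): AutoPollingClient {
  return new AutoPollingClient(sdkKey, POLL_INTERVAL_MS, fetchConfig);
}

// Recommended: one client per SDK key, reused for the lifetime of the app.
const shared = new Map<string, AutoPollingClient>();

function getSharedClient(sdkKey: string, fetchConfig: (key: string) => void): AutoPollingClient {
  let client = shared.get(sdkKey);
  if (client === undefined) {
    client = new AutoPollingClient(sdkKey, POLL_INTERVAL_MS, fetchConfig);
    shared.set(sdkKey, client);
  }
  return client;
}

// Call once on shutdown so no timer keeps polling.
function disposeAll(): void {
  shared.forEach((client) => client.dispose());
  shared.clear();
}
```

With the leaky version, ten lookups leave ten timers polling the CDN. With the shared version, the same ten lookups leave exactly one.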

We contacted the extension developer immediately. However, due to time zone differences and the multi-day review process for publishing browser extension updates, we could not expect a quick fix.

We had two plans prepared to handle such incidents.

We started separating the malicious traffic to limit the impact on the rest of our customers. Our goal was to redirect all malicious requests to a dedicated CDN node so that the remaining nodes stayed unaffected and could keep functioning properly.

29th September

3:00 AM UTC: We turned on the filtering in the production environment, which reduced the number of malicious requests still hitting the production CDN.

During the day: As we refined the filtering system and scaled the CDN vertically, the situation gradually returned to normal. Apart from a small number of requests that were still failing, the vast majority of our other customers' requests completed successfully.

30th September

The fixed version of the browser extension was released, and the number of malicious requests started to drop.

What have we learned?

Quickly telling legitimate traffic apart from malicious traffic was the biggest difficulty we had to address.

Actions taken: what do we have now?

By putting Cloudflare services in front of the ConfigCat infrastructure, ConfigCat can now handle 10 times the load we experienced during the incident. In addition, our new traffic managers let us separate legitimate traffic from malicious traffic even if Cloudflare fails to do so.

DDoS protection

We have configured DDoS protection to mitigate deliberate attacks on the infrastructure. From now on, it kicks in automatically, ensuring a fast response to sudden changes in traffic patterns.

Firewall

With firewall rules, we can block or separate traffic on a per-SDK-key basis. We will use these rules to manually fine-tune which requests are blocked from reaching the actual ConfigCat CDN. This lets us filter out DDoS traffic that somehow gets past Cloudflare's DDoS protection.
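
Per-SDK-key filtering boils down to a small decision function. The sketch below is hypothetical. It assumes the SDK key can be read from the request path, and the `/config/<sdkKey>/...` layout is invented for this example rather than taken from ConfigCat's real URL scheme. The `block` and `quarantine` verdicts mirror the blocking and separating described above. `quarantine` stands for something like the dedicated CDN node used during the incident.

```typescript
// "quarantine" sends the request to an isolated node pool instead of production.
type Verdict = "allow" | "block" | "quarantine";

interface FirewallRules {
  blocked: Set<string>; // SDK keys that must never reach the CDN
  quarantined: Set<string>; // SDK keys routed to a dedicated node
}

// Hypothetical URL layout: /config/<sdkKey>/...
function sdkKeyFromPath(path: string): string | undefined {
  const parts = path.split("/").filter((part) => part.length > 0);
  return parts[0] === "config" && parts.length >= 2 ? parts[1] : undefined;
}

function decide(path: string, rules: FirewallRules): Verdict {
  const key = sdkKeyFromPath(path);
  // Assumes the CDN only serves config downloads, so anything else is dropped.
  if (key === undefined) return "block";
  if (rules.blocked.has(key)) return "block";
  if (rules.quarantined.has(key)) return "quarantine";
  return "allow";
}
```

Because the rules are plain sets, an operator can move a key between `quarantined` and `blocked` without changing the decision logic.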

DNS-based traffic management

We also added a layer of DNS-based traffic managers to handle the traffic that gets through Cloudflare. This gives us another way to filter out malicious traffic in case Cloudflare is down or not working correctly.

Further improvements planned

Re-routing malicious traffic

For a long time, ConfigCat SDKs have allowed us to control which CDN subnet they send their requests to. The same functionality lets ConfigCat users keep their traffic on the EU-based CDN subnet if they need to be GDPR compliant. In a future DDoS situation, we will use it to redirect legitimate requests to new CDN subnets that are not affected by the attack.
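
One way to picture the redirect mechanism: the server returns a base-URL hint with each config download, and the SDK follows it. This is a hypothetical sketch. The `preferredBaseUrl` field, the `CdnEndpoint` class, and the `example.com` hosts are made up for illustration and do not describe ConfigCat's actual config JSON format or SDK internals.

```typescript
// Illustrative response shape; field names are invented, not ConfigCat's config JSON.
interface ConfigResponse {
  preferredBaseUrl?: string; // server hint: where future downloads should go
  flags: Record<string, boolean>;
}

class CdnEndpoint {
  private baseUrl: string;

  constructor(initialBaseUrl: string) {
    this.baseUrl = initialBaseUrl;
  }

  // URL for the next config download (hypothetical path layout).
  configUrl(sdkKey: string): string {
    return `${this.baseUrl}/config/${encodeURIComponent(sdkKey)}`;
  }

  // After each download, switch subnets if the server asks to.
  // Only HTTPS hints are accepted, so a hint cannot downgrade the client to plain HTTP.
  applyResponse(response: ConfigResponse): void {
    const hint = response.preferredBaseUrl;
    if (hint !== undefined && hint.startsWith("https://") && hint !== this.baseUrl) {
      this.baseUrl = hint;
    }
  }
}
```

During a DDoS, the server would send the hint only to SDK keys it considers legitimate. Their next requests would then land on a fresh subnet while the abusive keys stay on the old one. A production client would likely also restrict hints to trusted domains.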

Resilient SDKs

The ConfigCat SDKs must be updated to be more resilient to slow network responses and timeouts, so that ConfigCat users see less impact if a service outage happens in the future.
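
A common way to make a client tolerant of slow responses is to bound every download with a timeout. If the download times out or fails, the client falls back to the last config it fetched successfully. The sketch below shows that technique. `fetchWithFallback` and its parameters are hypothetical names, not part of any ConfigCat SDK.

```typescript
interface FetchResult<T> {
  value: T;
  fromCache: boolean; // true when the last known good config was served instead
}

// Bounds a config download with a timeout; on timeout or error, serves the cached config.
async function fetchWithFallback<T>(
  download: () => Promise<T>,
  timeoutMs: number,
  lastKnownGood: T,
): Promise<FetchResult<T>> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${timeoutMs} ms`)), timeoutMs);
  });
  try {
    // Whichever settles first wins: the download or the timeout.
    const value = await Promise.race([download(), timeout]);
    return { value, fromCache: false };
  } catch {
    // Slow or failing CDN: keep the app working on the cached config.
    return { value: lastKnownGood, fromCache: true };
  } finally {
    clearTimeout(timer);
  }
}
```

While the CDN is struggling, serving a slightly stale config is usually preferable to blocking the application or returning no value at all.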

Update auto-scaling

We will change how our CDN scales. Today, scaling requires human approval. In the future, we want scaling up to happen automatically, with a human only scaling back down manually later. This will let ConfigCat react faster to similar situations.
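
The planned policy is asymmetric: scaling up happens automatically, while scaling down waits for a human. A minimal sketch of that rule follows. The input shape is an assumption, not a description of ConfigCat's tooling. The per-node capacity and headroom values used with it are invented, and only the 600,000 and 3,000 requests/sec figures come from the incident.

```typescript
interface ScalingInput {
  currentNodes: number;
  requestsPerSec: number;
  capacityPerNode: number; // requests/sec one node can serve (assumed known)
  headroom: number; // e.g. 1.5 keeps 50% spare capacity
}

interface ScalingDecision {
  targetNodes: number; // size to apply right now
  recommendedNodes: number; // size the load alone would justify
  needsApproval: boolean; // true when a human should review a scale-down
}

function decideScaling(input: ScalingInput): ScalingDecision {
  const recommended = Math.max(
    1,
    Math.ceil((input.requestsPerSec * input.headroom) / input.capacityPerNode),
  );
  if (recommended > input.currentNodes) {
    // Scale out immediately, without waiting for a human.
    return { targetNodes: recommended, recommendedNodes: recommended, needsApproval: false };
  }
  // Never shrink automatically: keep the current size and flag it for manual scale-back.
  return {
    targetNodes: input.currentNodes,
    recommendedNodes: recommended,
    needsApproval: recommended < input.currentNodes,
  };
}
```

For example, suppose each node serves 50,000 requests/sec with 1.5x headroom. A jump to 600,000 requests/sec would then scale 4 nodes straight to 18. When traffic falls back to 3,000 requests/sec, the pool would stay at 18 and wait for a human to approve shrinking to 1. Keeping the scale-down manual trades some extra cost after a spike for safety. An attack that pauses briefly cannot trick the system into shedding capacity it may need again moments later.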

Summary

Being in a DDoS situation is never fun, and it's not easy to prepare for one. The incident last week gave ConfigCat the chance to test its infrastructure against a real-life DDoS. It allowed us to draw conclusions, take countermeasures, and prepare for the next one, which is sure to come.