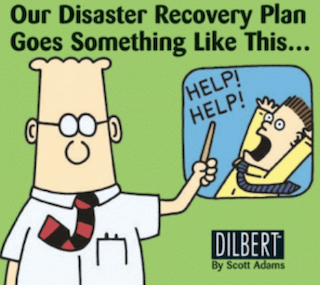

A fable about disaster recovery

Let me tell you a story about the myth of the Friday Release. A newbie QA guy was finishing up his tasks on a Friday evening, after hours. The office was nearly empty by then, with only a few people left.

Some were wrapping up their overseas status meetings, and some were waiting for their buddies to call before hitting the bar. We had had a pretty productive week. Support issues had been answered. Bugs had been fixed. Releases had been released. Dashboards were green. All systems were up.

What feature flags could have prevented

Suddenly the Project Manager approaches. She has just got off the phone with support and asks if our guy can double-check something. It turns out that the “It is just one line of code and totally safe to release on Friday” feature is causing performance issues in the US data centers. The responsiveness of our service is getting worse as overseas users finish their lunch and get back to work.

“How bad is it?” she asked.

“It is pretty bad, and it keeps getting worse with every new user who connects.”

“Can you fix it?”

“Well, I can check what went live today, so we can see more clearly.”

“Can you roll back?”

“I think I can.”

He could not. He made it worse by putting more load on the servers while trying to roll back. Now the service is unusable.

The phone rings. It is the Director of Network Operations, shouting “Our dashboards are screaming red!” and “What the heck are you guys doing there? Get me the Lead Engineer on the phone!” Luckily, it turned out that the Project Manager had his number.

“I am in front of a bar and already had a few drinks.”

“Doesn’t matter, I need you to fix this,” she screamed.

So the tipsy Lead Engineer told the QA guy over the phone, step by step, how to build, test, and deploy the previous version of our application, on the fly, to a multi-million-dollar environment spanning 10 geographic locations, each with 20+ servers, while it was being actively used by hundreds of thousands of users, and all of it in less than 4 hours.